Deepfake programs are artificial intelligence tools that let people produce convincing counterfeit video and audio, with consequences for the entertainment sector, political processes, and cybersecurity. The technology serves filmmakers' creative needs, but it continues to be misused to spread false information. The crypto sector accounted for 88% of all deepfake fraud incidents as deepfake fraud grew ten-fold from 2022 to 2023. The technology enables novel effects such as digitally adjusting an actor's age, while raising serious ethical problems that include deception and the distribution of false content. The accessibility of deepfake tools calls for immediate attention, regulation, and clear standards for proper use to prevent misuse.

This article examines how deepfake software impacts digital content, media, and online security. It discusses creative uses in filmmaking, gaming, and advertising. It also points out ethical issues like misinformation, fraud, and privacy violations. These concerns highlight the need for awareness across various industries.

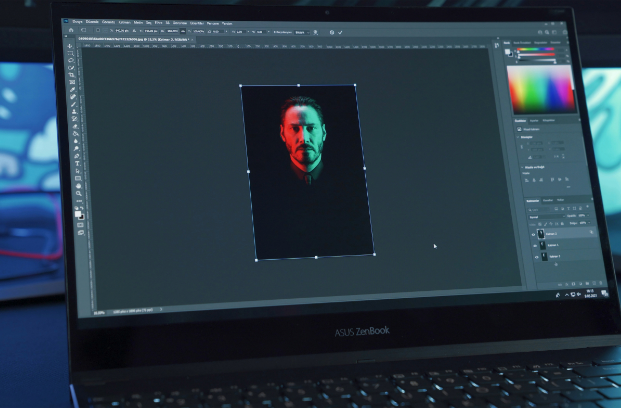

The Evolution of Deepfake Software

Deepfake software has improved significantly, evolving from basic animation outputs to hyperrealistic digital images. Earlier versions produced awkward facial expressions and lighting problems, but modern AI advances have corrected these early flaws. Neural networks and machine learning systems now generate realistic faces, voices, and expressions.

In a generative adversarial network (GAN), two AI models compete against each other to produce increasingly realistic outputs, while deep learning more broadly relies on examining large datasets to replicate human behavior. Facial capture gathers detailed information about facial expressions and skin texture, and voice cloning duplicates genuine speech patterns. As a result, deepfakes have become harder to detect.
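To make the "two models compete" idea concrete, here is a minimal sketch of adversarial training on one-dimensional numbers instead of images. Everything in it is an illustrative assumption: the function name `train_toy_gan`, the one-parameter generator (it only learns a shift `b`), the logistic discriminator, and the learning rate are chosen for readability and bear no resemblance to a production deepfake model.

```python
import math
import random


def sigmoid(u):
    # Numerically stable logistic function.
    if u >= 0:
        return 1.0 / (1.0 + math.exp(-u))
    e = math.exp(u)
    return e / (1.0 + e)


def train_toy_gan(steps=300, batch=64, lr=0.05, real_mean=4.0, seed=0):
    """Toy GAN on scalars (hypothetical setup, for illustration only).

    Discriminator: D(x) = sigmoid(w * x + c), tries to score real samples high.
    Generator:     G(z) = z + b, tries to make its samples score high too.
    """
    rng = random.Random(seed)
    w, c = 0.0, 0.0  # discriminator parameters
    b = 0.0          # generator parameter (a learned shift)

    for _ in range(steps):
        real = [rng.gauss(real_mean, 0.5) for _ in range(batch)]
        fake = [rng.gauss(0.0, 0.5) + b for _ in range(batch)]

        # Discriminator step: gradient ascent on
        # log D(real) + log(1 - D(fake)).
        grad_w = grad_c = 0.0
        for x in real:
            d = sigmoid(w * x + c)
            grad_w += (1.0 - d) * x
            grad_c += (1.0 - d)
        for x in fake:
            d = sigmoid(w * x + c)
            grad_w -= d * x
            grad_c -= d
        w += lr * grad_w / batch
        c += lr * grad_c / batch

        # Generator step: gradient ascent on log D(fake)
        # (the common "non-saturating" generator objective).
        grad_b = sum((1.0 - sigmoid(w * x + c)) * w for x in fake) / batch
        b += lr * grad_b

    return b, (w, c)


if __name__ == "__main__":
    shift, disc = train_toy_gan()
    print("learned generator shift:", shift)
    print("discriminator parameters:", disc)
```

The discriminator learns to separate real from generated samples, and the generator is updated using the discriminator's own gradient, so each model's progress puts pressure on the other. Real deepfake systems apply the same feedback loop to deep networks and image or audio data; the exact values this toy run prints depend on the seed and step size.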

The Rise of Deepfake Video Software

Deepfake video programs are significantly transforming the media and entertainment sectors. Filmmakers can adjust actors' ages and portray historical figures, and marketers can generate personalized promotional material featuring realistic AI-based spokespeople. Creators in the entertainment industry use deepfake tools to produce celebrity impersonations and enrich their storytelling. Studios can correct dialogue without additional filming, while video game developers use face-swapping technology to improve the behavior of virtual characters. Social media influencers use deepfake videos to engage their audiences, showing how this technology is changing visual storytelling and creativity.

The Role of AI Deepfake Software in Manipulating Reality

AI deepfake software makes it easy to create realistic false content by altering video, audio, and image files to reproduce real people convincingly. These impersonation tools need only a few short voice or appearance samples to create convincing fake identities that even experts cannot identify through basic methods. Scammers can, for example, impersonate executives on video calls to fraudulently obtain company funds.

Political actors use deepfake technology to spread fake videos that show opponents saying or doing things they never said or did. These altered clips spread across social media platforms and can deceive voters, who may then make election decisions based on fabricated information. Cybercriminals have also recognized the potential of deepfake audio to generate fake voicemails or phone calls that imitate bank managers and other trusted figures in order to obtain private information from victims.

A deepfake video of a world leader announcing false military operations or emergencies could cause global panic and international tension. Scammers also exploit victims' emotions with fake phone calls from supposed family members who urgently request wire transfers. Malicious actors craft fake news videos that imitate the format and design of major broadcast networks to make false stories more credible and speed up their spread.

The growing threat from deepfakes has made it significantly harder to distinguish authentic events from fabricated ones. As the technology advances, society needs to act promptly on the ethical, legal, and security problems it raises.

Combating Fakes with Deepfake Detection Software

The rise of AI-generated content has made deepfake detection software essential. These tools examine video for abnormal facial movements and lighting discrepancies, and they pick up minor defects that humans tend to overlook. Detection tools such as Microsoft's Video Authenticator analyze footage for signs of digital manipulation. Commonly cited cues include irregular blinking patterns and mismatches between lip movement and facial expression. DeepFaceLab, by contrast, is a tool for creating face swaps rather than detecting them. Detection software plays a crucial role in protecting trust and guarding against fraudulent information.
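As a rough illustration of the blinking cue, the sketch below uses the eye aspect ratio (EAR), a landmark-based measure of how open an eye is, to count blinks and flag an unusual blink rate. It assumes that six eye landmarks per frame have already been extracted by some face-landmark detector, which is not shown. The function names, the `closed_below` threshold, and the `low`/`high` rate bounds are hypothetical values chosen for the example. This is a simple heuristic, not a description of how Video Authenticator or any other product works.

```python
import math


def eye_aspect_ratio(pts):
    """EAR from six (x, y) eye landmarks ordered p1..p6:
    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    p5 and p6 on the lower lid (p2 above p6, p3 above p5)."""
    p1, p2, p3, p4, p5, p6 = pts
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = 2.0 * math.dist(p1, p4)
    return vertical / horizontal


def count_blinks(ear_series, closed_below=0.2, min_frames=2):
    """Count runs of at least `min_frames` consecutive frames in which
    the EAR drops below `closed_below` (illustrative threshold)."""
    blinks, run = 0, 0
    for ear in ear_series:
        if ear < closed_below:
            run += 1
        else:
            if run >= min_frames:
                blinks += 1
            run = 0
    if run >= min_frames:  # clip ended while the eye was still closed
        blinks += 1
    return blinks


def blink_rate_is_unusual(ear_series, fps, low=5.0, high=40.0):
    """Return (flag, blinks_per_minute). The low/high bounds are
    assumptions for this sketch, not validated clinical limits."""
    if not ear_series or fps <= 0:
        raise ValueError("need at least one frame and a positive fps")
    minutes = len(ear_series) / fps / 60.0
    rate = count_blinks(ear_series) / minutes
    return (rate < low or rate > high), rate


if __name__ == "__main__":
    # One minute of 30 fps footage in which the eyes never close.
    flag, rate = blink_rate_is_unusual([0.3] * 1800, fps=30)
    print(flag, rate)
```

A clip flagged this way is only a candidate for closer inspection. Short clips, sunglasses, and compression artifacts can all distort the signal, and practical detectors combine many cues rather than relying on blinking alone.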

The Future of Deepfake Software: Regulation and Ethical Concerns

Both governments and private companies continue to develop rules for controlling deepfakes. Governments in several countries are considering either substantial legal penalties for deepfake fraud and disinformation or mandatory watermarks that allow the origin of a deepfake to be traced. Legislation needs to protect freedom of expression and artistic creation while still addressing security concerns.

Deepfake technology poses a dilemma because it enables both creative value and harmful fraudulent schemes. It raises major ethical problems because it blurs the boundary between real and artificial information. AI development requires both firm regulation and responsible practices to mitigate the resulting risks.

These developments raise serious ethical concerns about consent, privacy, authenticity, and accountability in the digital age. Addressing these issues requires not only well-crafted laws but also a cultural shift toward responsible AI development and digital literacy. Technology companies must implement safeguards and detection tools, while users need to develop critical media consumption habits. Ultimately, mitigating the risks of deepfakes calls for a multi-layered approach—one that includes regulation, education, transparency, and international cooperation—to ensure that AI innovation serves the public good without compromising truth or security.

Conclusion

Modern deepfake technology affects how society handles media consumption, security, and communication. The same technology that enables creative content for marketing and film also enables the spread of fake content and fraud. As AI-generated content becomes standard across media, responsible approaches to innovation are needed to govern its widespread use. People need to learn how to recognize deepfakes in order to counter their everyday misuse. Whether deepfake technology promotes truth or endangers it will depend on how society balances security and innovation.
